Airflow 3.0: Architectural changes

The most exciting Airflow release in years is on the horizon, and it's coupled with big architectural changes.

Airflow 3.0 is the biggest release for Apache Airflow in years. It brings exciting new features such as external task execution, explicit versioning of DAGs, proper event-based scheduling and more. Airflow will also modernize its web application by decoupling the API from the UI, giving Airflow a fresh new look in the process.

Coupled with all of the above come a few significant architectural changes. In this post I will walk you through these changes in detail, covering native support for external workers, a modern web application for Airflow, and data assets powering event-driven workflows.

Native support for external workers

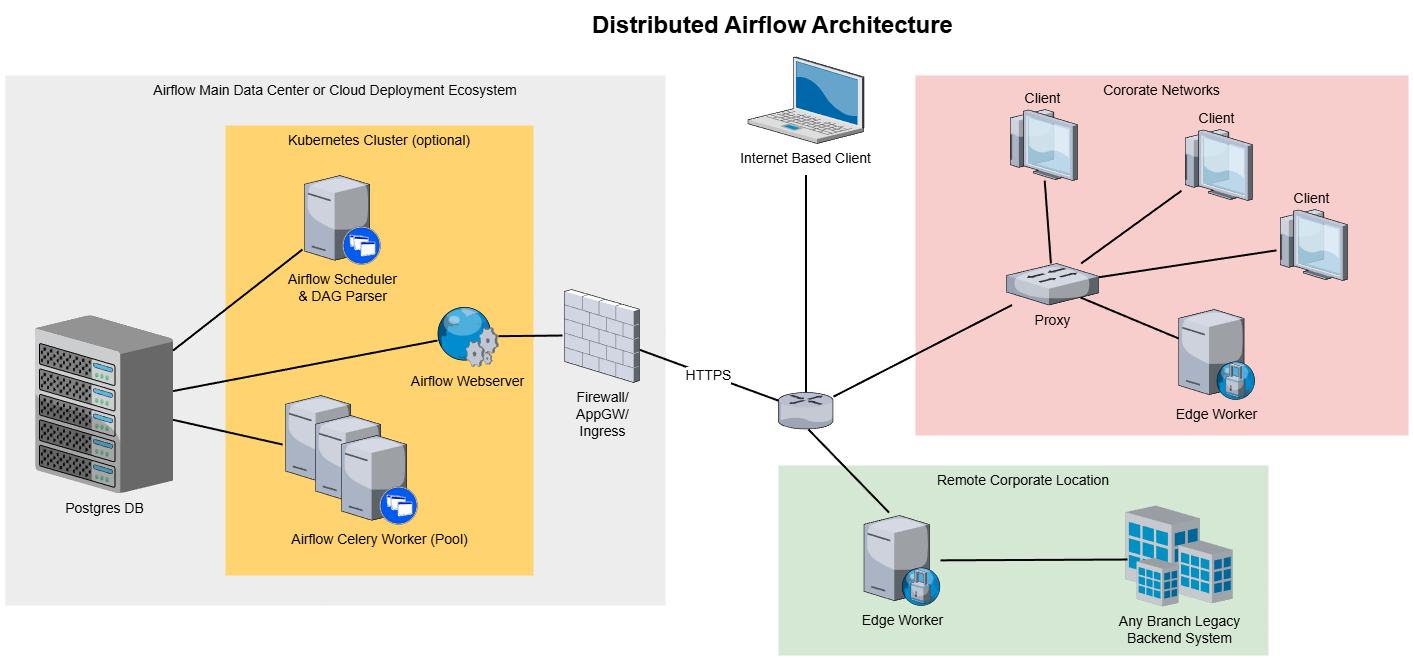

Until Airflow 3.0, Airflow workers were typically deployed inside the Airflow deployment, e.g. as Kubernetes pods or as Celery workers. If you've ever deployed Airflow yourself, you are probably familiar with configuring Helm charts to accommodate a particular worker setup, a process that can become quite tedious.

Deferrable tasks already make it possible to run asynchronous work while freeing up the Airflow worker slot. And while it has been possible in theory (SSHOperator, anyone?), up until now Airflow did not natively support running workers on remote machines. With Airflow 3.0 this all changes.

Workers will no longer have to be contained in the Airflow deployment. Airflow refers to these external workers as "edge executors". This means that a few things will change:

Workers can no longer access the Airflow database. Traditionally, all Airflow components have had direct access to the Airflow metadata database, as it is responsible for state tracking. Decoupling workers from the database makes it possible to run them remotely. This is primarily facilitated through the new Task SDK.

Workers will communicate through the Airflow REST API. Authentication of the external workers will be done through JWT or ID tokens.

Workers will no longer be bound to the Airflow packages (or a custom image). They will no longer depend on the packages installed in the image bundled with the Airflow deployment. Instead, a separate provider package, much more lightweight than the Airflow base image, will provide the tools necessary to run an external worker.
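The split described above (workers pull work and report state through an authenticated API, while only the API server touches the database) can be sketched in a few lines. This is a toy model under my own assumptions, not the Task SDK: `FakeApiServer`, `run_edge_worker`, `make_jwt` and `verify_jwt` are hypothetical names, and the token is a plain HS256 JWT (RFC 7519) signed with the standard library. A real deployment would use a maintained JWT library and Airflow's actual endpoints.

```python
import base64
import hashlib
import hmac
import json
import time
from dataclasses import dataclass, field


def _b64url(data: bytes) -> str:
    # JWTs use unpadded base64url encoding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_jwt(claims: dict, secret: bytes) -> str:
    """Sign `claims` as a compact HS256 JWT: header.payload.signature."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode()) for part in (header, claims)
    )
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(sig)}"


def verify_jwt(token: str, secret: bytes) -> dict:
    """Check the HS256 signature and the `exp` claim, then return the claims."""
    signing_input, _, sig = token.rpartition(".")
    expected = _b64url(hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest())
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        raise PermissionError("invalid signature")
    payload = signing_input.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped padding
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if claims.get("exp", 0) < time.time():
        raise PermissionError("token expired")
    return claims


@dataclass
class FakeApiServer:
    """Hypothetical stand-in for Airflow's API server.

    Only this object touches `states`, which plays the role of the metadata DB.
    """
    secret: bytes
    queue: list = field(default_factory=list)
    states: dict = field(default_factory=dict)

    def next_task(self, token: str):
        verify_jwt(token, self.secret)
        return self.queue.pop(0) if self.queue else None

    def report_state(self, token: str, task_id: str, state: str) -> None:
        verify_jwt(token, self.secret)
        self.states[task_id] = state


def run_edge_worker(api: FakeApiServer, token: str, tasks: dict) -> None:
    """Pull work over the API, run it locally, and report the outcome.

    The worker never sees the database; it only holds a token.
    """
    while (task_id := api.next_task(token)) is not None:
        try:
            tasks[task_id]()
            api.report_state(token, task_id, "success")
        except Exception:
            api.report_state(token, task_id, "failed")


secret = b"demo-secret"
api = FakeApiServer(secret, queue=["extract", "load"])
token = make_jwt({"sub": "edge-worker-1", "exp": int(time.time()) + 300}, secret)
run_edge_worker(api, token, {"extract": lambda: None, "load": lambda: 1 / 0})
print(api.states)  # {'extract': 'success', 'load': 'failed'}
```

In this sketch the worker only ever holds a short-lived token, so granting or revoking a remote worker's access comes down to issuing or rotating a credential rather than exposing the database.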

A modern web application for Airflow

People tend to forget how awful the Airflow UI was back in the day. Airflow 2.0 gave the UI a significant facelift. Under the hood, however, not much changed.

One of the main culprits is the core dependency on Flask-AppBuilder (the infamous FAB). FAB made it convenient to set up the Airflow UI on top of the original Flask app, but nowadays it falls short in many areas. It is the primary reason why it has been difficult to customise the UI (e.g. the terrible dark mode), to move to a near real-time UI, and to attract front-end talent as contributors.

Airflow 3.0 moves away from FAB and brings a new, modernised tech stack. There are a few architectural changes to highlight:

The UI and API will be fully decoupled. The focus will be on maturing the API, and the UI will be built entirely on top of the Airflow REST API.

A modern front-end stack has been selected. React (TypeScript, React Query) together with Chakra UI components will power the Airflow UI going forward. The hope is that this attracts more front-end talent to the Airflow project. In addition, React opens up many possibilities, such as customisation, themes and component libraries.

Custom web views will support React plugins. Custom web views are a way to customise the Airflow UI; previously they had to be built with Flask templates. The move to React-based plugins means more freedom and customisation, which opens up the potential of using the Airflow UI beyond the typical data engineering scope.

All of this means the Airflow web application is entering a new era. Because these are fundamental changes, the roll-out will likely be staged, so the dependency on FAB will probably not be fully gone until later releases after 3.0.
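With the UI built entirely on the REST API, anything the UI shows is something any HTTP client can fetch as well. As a rough sketch, a client listing DAGs could look like the code below. The `/api/v2/dags` path, the bearer-token header and the `{"dags": [{"dag_id": ...}]}` response shape are my assumptions about the Airflow 3 API (check the API reference for your version), and the sample payload is made up.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_list_dags_request(base_url: str, token: str, limit: int = 100) -> urllib.request.Request:
    """Build (but do not send) an authenticated GET request for the DAG list.

    Assumption: the endpoint is /api/v2/dags; check the API reference for your version.
    """
    query = urlencode({"limit": limit})
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/v2/dags?{query}",
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


def dag_ids(payload: str) -> list[str]:
    """Pull DAG ids out of a JSON body shaped like {"dags": [{"dag_id": ...}, ...]}."""
    return [dag["dag_id"] for dag in json.loads(payload)["dags"]]


req = build_list_dags_request("http://localhost:8080/", "example-token", limit=10)
print(req.full_url)  # http://localhost:8080/api/v2/dags?limit=10

# Made-up response body, standing in for what urllib.request.urlopen(req) would return.
sample = '{"dags": [{"dag_id": "daily_sales"}, {"dag_id": "ml_training"}], "total_entries": 2}'
print(dag_ids(sample))  # ['daily_sales', 'ml_training']
```

Sending the request is then a matter of calling `urllib.request.urlopen(req)` against a running instance. The design consequence is that the UI becomes just another API client, so whatever it does can also be scripted.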

Data assets powering event-driven workflows

From its inception, Airflow has been about running scheduled workflows. Its origins lie in cron-based scheduling, which to this day is a popular way of scheduling data tasks. In recent years, however, the focus in data orchestration has shifted towards event-driven workflows.

In this area Airflow is facing increased competition from tools like Dagster, which is built around the concept of data assets. Data assets make Dagster "data-aware", meaning its DAGs have context about the data they process. To counter the rise of data-aware orchestration tools, Airflow 2.4 added support for data-aware scheduling through Datasets. This was the first step towards data-awareness.
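In Airflow 2.4, a producer task declares a `Dataset` in its `outlets`, and a consumer DAG lists Datasets in its `schedule`; the consumer runs once every listed Dataset has been updated since its last run. The toy model below mimics only that triggering rule. `DatasetScheduler` is a hypothetical class, not Airflow's scheduler, and the URIs are made up.

```python
from collections import defaultdict


class DatasetScheduler:
    """Toy model of Dataset-triggered scheduling; not Airflow's implementation."""

    def __init__(self) -> None:
        self.consumers: dict[str, set[str]] = {}  # dag_id -> URIs it is scheduled on
        self.pending = defaultdict(set)  # dag_id -> URIs updated since its last run
        self.runs: list[str] = []  # consumer DAG runs, in trigger order

    def register_consumer(self, dag_id: str, uris: list[str]) -> None:
        self.consumers[dag_id] = set(uris)

    def dataset_updated(self, uri: str) -> None:
        """Record that a producer task successfully updated `uri`."""
        for dag_id, needed in self.consumers.items():
            if uri in needed:
                self.pending[dag_id].add(uri)
                # Trigger only once every upstream Dataset has been updated.
                if self.pending[dag_id] == needed:
                    self.runs.append(dag_id)
                    self.pending[dag_id] = set()


sched = DatasetScheduler()
sched.register_consumer("daily_report", ["s3://bucket/orders", "s3://bucket/customers"])
sched.dataset_updated("s3://bucket/orders")
print(sched.runs)  # []
sched.dataset_updated("s3://bucket/customers")
print(sched.runs)  # ['daily_report']
```

Note that the trigger fires only when a producer reports an update; nothing in this model looks at the data itself.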

However, the implementation revolves only around connecting DAGs through Dataset dependencies. This brought new challenges:

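Actual data-aware triggering has not been added natively. By default, a Dataset counts as updated only when an Airflow task that lists it as an outlet succeeds. While it's possible to trigger DAGs programmatically or to write your own dataset polling system, Airflow has never supported data-aware triggering out of the box.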

The concept of a "Dataset" doesn't extend well into the ML world, where the thing being produced could just as well be an ML model or an LLM prompt.

Hence, Airflow will rename Datasets to Data assets and work on truly event-driven workflows. Some exciting features are also coming to Data assets:

Subclasses of Data assets will be supported. Subclasses such as Datasets and Models will be available to distinguish between different kinds of Data assets.

Data assets will natively support partitions, such as time-based or segment-based partitions.

Polling-based workflows will be supported for Data assets. Event-based workflows using polling (and, in the future, external push-based triggers) will be built into the Data assets framework.
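To illustrate how subclasses, partitions and polling could fit together, here is a small sketch. `Asset`, `Dataset`, `Model`, `AssetEvent` and `poll_once` are hypothetical names that mirror the terms used above, not Airflow's actual classes, and the date strings are made-up stand-ins for time-based partitions. Each poll compares the partitions that currently exist with those already seen and emits one event per new partition, which is the basic mechanism behind polling-based triggering.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Asset:
    """Hypothetical base class: any piece of data identified by a URI."""
    uri: str


@dataclass(frozen=True)
class Dataset(Asset):
    """A table- or file-like asset."""


@dataclass(frozen=True)
class Model(Asset):
    """A trained ML model."""


@dataclass(frozen=True)
class AssetEvent:
    asset: Asset
    partition: str  # e.g. an ISO date for time-based partitions


def poll_once(
    asset: Asset, list_partitions: Callable[[], Iterable[str]], seen: set[str]
) -> list[AssetEvent]:
    """Return one event per partition that appeared since the previous poll."""
    new = sorted(set(list_partitions()) - seen)
    seen.update(new)
    return [AssetEvent(asset, p) for p in new]


orders = Dataset("s3://bucket/orders")
available = ["2024-01-01"]  # partitions that currently exist (made-up data)
seen = set()

first = poll_once(orders, lambda: available, seen)
available.append("2024-01-02")
second = poll_once(orders, lambda: available, seen)
third = poll_once(orders, lambda: available, seen)
print([e.partition for e in first], [e.partition for e in second], third)
# ['2024-01-01'] ['2024-01-02'] []
```

Polling is the simplest way to get event-like behaviour without the source system's cooperation; push-based triggers would replace the `list_partitions` call with notifications sent by the source.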

Additional reading material

If you want to read up on the latest developments in Airflow 3.0 yourself, I've compiled a list of resources:

Airflow 3.0: External workers

This is the first edition of The Data Canal newsletter. If you've enjoyed this post, do not forget to subscribe. This newsletter will contain insights into data & AI tools, architecture and career. Stay tuned for the next editions.