Current trends in the field of data analytics

Data analytics capabilities are top of mind for companies. With the rise of self-serve analytics, embedded analytics and AI agents for analytics, where are we headed?

The field of data analytics is rapidly evolving. In the past few years many companies have been focusing on more data-driven decision making. This is underlined by increased investments (up 54% in 2024) into data analytics capabilities as revealed by a recent CX study. Companies are realising that having solid data foundations is necessary to fully harness advanced applications like predictive analytics, GenAI and AI agents.

Here are a few underlying trends that we'll focus on in this post:

Self-service analytics is top of mind for many companies. Non-technical stakeholders are expected to meet their own analytics needs, and customers are served directly through embedded analytics. What are some of the fundamentals that need to be in place for self-serve? What are the pitfalls of embedded analytics, and how can the experience be optimised?

Nowadays tools like Databricks, Snowflake and BigQuery offer vast data ecosystems that can power almost any data product. Nevertheless, even when using such an ecosystem, plenty of maintenance and upkeep is still necessary. How does one navigate this? What are the complexities that companies face when setting up and managing these tools?

GenAI and AI agents are on the verge of reshaping the data analytics field. What will this mean for data professionals? Is this the end of dashboards as we know them?

1. The rise of self-serve analytics

Self-service analytics has the potential to enable users to directly access and interpret data without heavy reliance on tech or data teams. This shift is driven by the need for faster decision-making and the democratisation of data across organisations. Companies that strive for self-service data analytics sometimes overlook a crucial point: the data fundamentals need to be solid for self-serve to succeed.

The required data fundamentals

Self-service analytics relies heavily on the availability of accurate, consistent and well-documented data. If this is not guaranteed, at least to a certain degree, it will inevitably lead to the following struggles:

Without well-documented data, it becomes difficult if not impossible for users to navigate through a self-service set-up.

Without accurate and consistent data, users will quickly lose trust in the data, which often leads them to abandon it altogether.

When setting up self-service data analytics, data dictionaries come into play to provide adequate data documentation. A data dictionary serves as a centralised repository of metadata, providing definitions, formats, and relationships between data elements. For non-technical users, this is crucial to obtain understanding of the data and to ensure correct usage.
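To make the idea concrete, here is a minimal sketch of a data dictionary held in code. The table, column names and descriptions (`orders`, `net_revenue`, and so on) are hypothetical examples, not taken from any real schema, and in practice this metadata would usually live in a data catalogue rather than a Python module.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ColumnDefinition:
    """One entry of the dictionary: definition, format and relationship."""
    name: str
    dtype: str
    description: str
    references: Optional[str] = None  # "table.column" this column points to


# Hypothetical example content; a real dictionary would cover every table.
DATA_DICTIONARY = {
    "orders": [
        ColumnDefinition("order_id", "integer", "Unique identifier of an order."),
        ColumnDefinition(
            "customer_id",
            "integer",
            "Customer who placed the order.",
            references="customers.customer_id",
        ),
        ColumnDefinition(
            "net_revenue",
            "decimal",
            "Order value in EUR, excluding VAT and refunds.",
        ),
    ],
}


def describe(table: str, column: str) -> str:
    """Return a human-readable definition, or fail loudly if undocumented."""
    for col in DATA_DICTIONARY[table]:
        if col.name == column:
            text = f"{table}.{col.name} ({col.dtype}): {col.description}"
            if col.references:
                text += f" References {col.references}."
            return text
    raise KeyError(f"{table}.{column} is not documented")
```

Calling `describe("orders", "net_revenue")` gives a non-technical user the agreed definition of the metric, while an undocumented column raises an error instead of being silently guessed at.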

To ensure accurate and timely data, data contracts are a critical component. These are agreements between data producers and consumers that define the structure, format, and quality expectations of data. In a self-service environment, data contracts ensure that changes in data pipelines or sources don't disrupt downstream analytics. They act as a safeguard, maintaining consistency and reliability across an organisation.
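As an illustration, a data contract can be as small as an agreed list of fields and types that every record is checked against. The sketch below is an assumption about what such a check might look like; the field names and the `contract_violations` helper are hypothetical, and dedicated tooling typically covers far more (semantics, freshness, ownership).

```python
# Hypothetical contract agreed between the producer and consumers of "orders".
CONTRACT = {
    "order_id": int,
    "customer_id": int,
    "net_revenue": float,
}


def contract_violations(record: dict, contract: dict = CONTRACT) -> list:
    """List every way in which a record deviates from the contract."""
    violations = []
    for field, expected_type in contract.items():
        if field not in record:
            violations.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            violations.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(record[field]).__name__}"
            )
    # Fields the producer added without updating the contract.
    for field in sorted(record.keys() - contract.keys()):
        violations.append(f"unexpected field: {field}")
    return violations
```

Running a check like this in the producer's pipeline, before data is published, is what keeps a schema change from silently breaking downstream dashboards.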

Data quality assurance is equally important for the consistency and quality of data. Poor data quality can lead to flawed insights and misguided decisions. Automated data quality checks, such as validation rules, anomaly detection, and completeness checks, help maintain the integrity of the data. For self-service analytics to succeed, organisations must invest in robust data governance frameworks that include these elements.
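The three kinds of checks mentioned above can be sketched in a few lines of plain Python. This is a simplified illustration under my own assumptions (rows as dictionaries, a z-score rule for anomalies); the function names are hypothetical and frameworks built for this purpose offer much richer checks.

```python
from statistics import mean, stdev


def completeness(rows: list, column: str) -> float:
    """Share of rows in which `column` is present and not None."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def rule_violations(rows: list, column: str, rule) -> list:
    """Rows whose non-null value for `column` fails the validation rule."""
    return [
        row
        for row in rows
        if row.get(column) is not None and not rule(row[column])
    ]


def anomalies(values: list, threshold: float = 3.0) -> list:
    """Values more than `threshold` sample standard deviations from the mean."""
    if len(values) < 2:
        return []
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]
```

A pipeline could, for example, fail a run when `completeness(rows, "net_revenue")` drops below an agreed level or when `rule_violations(rows, "net_revenue", lambda v: v >= 0)` is non-empty. Note that with very small samples a z-score can never get large, so the anomaly threshold needs to be chosen with the sample size in mind.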

Without the above, it's very challenging to achieve a successful self-serve analytics set-up. Here are a few pointers if you're striving for self-service analytics:

Use readily available governance tooling. When it comes to building and improving data documentation, there are many tools that can help. For example, data catalogue tools such as DataHub (open-source) and Alation can significantly speed up an organisation-wide data dictionary implementation.

Enforce usage of data dictionaries. It's important that data dictionaries are enforced through standardised query and data models such that definitions are guaranteed to be aligned across an organisation.

Shift responsibilities to early in the process. I recommend reading up on the concept of a Shift Left data approach. It revolves around placing data governance early in the process and shifting focus towards the producer of the data (shifting responsibilities left, i.e. earlier in the process). Platforms like Gable.ai are built with this in mind and also support data contracts out of the box. Find more resources on data contracts in this GitHub repo.

Data quality assurance by using frameworks. Data quality frameworks like Great Expectations, Soda Core or dbt tests (all available open-source as well as hosted) make it much easier to implement data quality assurance in your data pipelines. These also integrate well into tools like Airflow, dbt and Dagster.

Optimising the customer's analytics experience

Embedded analytics, which integrates analytical capabilities directly into customer-facing (mainly SaaS) applications, is becoming increasingly popular. It allows customers to access deep data insights without leaving the platform, enhancing user experience and engagement. Embedded analytics faces similar problems to internal self-service analytics, as embedding analytics often goes hand in hand with self-serve for customers. There are, however, additional challenges compared to internal analytics:

Performance issues: Many embedded analytics applications are poorly optimised for being embedded into an existing front-end, with problems ranging from long loading times to poor query performance.

Data security: Embedded analytics requires careful handling of sensitive customer data. Organisations must implement robust security measures to ensure that users only have access to specific data. Row level security and role-based access controls are tools that are often required to be able to offer embedded analytics.

Complex user interface: A lot of embedded analytics tools are BI tools that offer embedding capabilities. These are often coupled with complex interfaces that can quickly overwhelm users.

Inadequate UI customisation: Many embedded analytics tools offer only limited customisation of the look and feel. In many cases only colour schemes and fonts can be adjusted, which means that the experience will never feel like part of your application.
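To illustrate the data security point, the sketch below combines row-level security (a customer only sees rows of their own tenant) with role-based access control (a role only sees certain columns). Everything here is a hypothetical example: the `User` class, the roles and the in-memory rows are assumptions for illustration, and in production these rules would normally be enforced in the database or the embedded analytics layer rather than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: str
    tenant_id: str
    role: str  # hypothetical roles: "admin" or "viewer"


# Columns each role is allowed to see (role-based access control).
ROLE_COLUMNS = {
    "admin": {"tenant_id", "region", "revenue"},
    "viewer": {"region", "revenue"},
}

# Hypothetical multi-tenant data set shared by all customers.
ROWS = [
    {"tenant_id": "acme", "region": "EU", "revenue": 120.0},
    {"tenant_id": "globex", "region": "US", "revenue": 300.0},
]


def visible_rows(user: User, rows: list) -> list:
    """Apply the row filter first, then strip columns the role may not see."""
    allowed = ROLE_COLUMNS[user.role]
    return [
        {key: value for key, value in row.items() if key in allowed}
        for row in rows
        if row["tenant_id"] == user.tenant_id  # row-level security
    ]
```

The important design choice is that the tenant filter is derived from the authenticated user, never from a parameter the front-end can change.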

Here are a few recommendations to deal with the above:

Before starting with embedded analytics, ensure that internal data fundamentals are in place first. Concepts like data dictionaries, data contracts and data quality assurance will ensure that data can be more confidently shared with customers directly.

When picking an embedded analytics tool, look at native embedded analytics tools like Embeddable or Luzmo. These are specifically built with embedded analytics in mind and allow for highly customisable and performant experiences out of the box.

2. Leveraging a data ecosystem

The adoption of tools like Databricks and Snowflake has revolutionised data processing and analytics capabilities. These platforms offer unparalleled scalability, speed, and flexibility, enabling organisations to handle massive datasets and perform complex analyses. Let’s dive into what makes these tools unique.

Databricks vs Snowflake

2024 has been the year of Databricks vs Snowflake. But what defines each solution and what sets them apart?

Databricks, for instance, has become a one-stop shop for big data processing (Apache Spark) and machine learning (MLflow, and more). Its unified analytics platform allows data engineers, data scientists and data analysts to collaborate seamlessly. Its notebook solution, for example, stands out for its features and sets itself apart from alternatives like Colab Enterprise and Synapse notebooks. With capabilities like Delta Lake and Delta Live Tables, Databricks sets the stage for data reliability, consistency and data streaming use cases. Together with its tight integration with cloud platforms like AWS and Azure, this makes it a preferred choice for organisations that have the technical capabilities to harness Databricks to its full potential.

Snowflake, on the other hand, has redefined cloud data warehousing with its ease of use and near-zero maintenance solution. For many organisations Snowflake has enabled data teams to focus on the data itself (SQL anyone?) rather than on infrastructure, code or scalability problems. It was also one of the first to feature an architecture that separates storage and compute. This has enabled organisations to reduce complexity and process vast amounts of data without the need for continuously running servers, which made Snowflake a go-to solution for organisations that want a powerful out-of-the-box data warehousing solution. Nevertheless, like Databricks, Snowflake has expanded its capabilities and now offers a data ecosystem that powers everything from ML applications to advanced analytics.

All in all, there is no wrong choice when going with either solution. For more specialised use cases Databricks might offer a bit more control and more capabilities, while Snowflake is likely the better choice for less technical teams.

The pitfalls and how to mitigate them

While these tools are convenient to use, managing them can come with significant challenges:

Analytics costs can quickly spiral out of control, especially when the pay-as-you-go pricing model of many of these tools is not paired with proper optimisation and controls.

Even when betting on one data ecosystem, you might still need many other tools: ETL, ELT, data warehouses, transformation layers, orchestration, BI dashboards, reverse ETL. Most likely you will need to introduce other tools to cover all data use cases, so the complexity of managing many different tools will probably remain a problem in the world of data.

New tools, best practices, and industry trends emerge constantly. What worked two years ago might be outdated today. While Databricks or Snowflake rule today, this might be different in the years to come.

Talent in the market is scarce. People with proper experience in setting up and maintaining data technology stacks are hard to find. Especially if you're looking for specific expertise, you might be out of luck or have to pay a premium, so take this into account.

Here are a few recommendations to mitigate the above:

Keep your tech stack simple. Where possible keep tooling options limited to one choice. Consolidate where possible. Buying into a single ecosystem can significantly simplify things and usually make hiring easier.

Set budgets and monitoring alerts. First of all, you should understand the usage patterns. With this you can set up the appropriate budgets and monitoring. In addition, methods like caching, pre-aggregating data and putting guardrails in place (e.g. a required filter) can reduce the risk of excessive costs.

Plan ahead as early as possible. Think early on about what is necessary to scale your data solution, from both an architecture and a people perspective.

Keep up to date. Follow industry leaders, attend conferences and look at learning opportunities (bootcamps, courses, etc.).
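The budget and guardrail recommendation above can be sketched as follows. This is a minimal illustration under assumed names and thresholds (`budget_alerts`, `enforce_required_filter`, alerts at 50%, 80% and 100% of the budget); most platforms offer their own budget and monitoring features, which would be the first place to look.

```python
def budget_alerts(spend_to_date: float, monthly_budget: float,
                  thresholds=(0.5, 0.8, 1.0)) -> list:
    """Return every budget threshold that the current spend has crossed."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    used = spend_to_date / monthly_budget
    return [t for t in thresholds if used >= t]


def enforce_required_filter(filters: dict, required: str = "date_range") -> dict:
    """Guardrail: refuse queries that would scan data without a required filter."""
    if not filters.get(required):
        raise ValueError(f"queries must include a '{required}' filter")
    return filters
```

With a monthly budget of 1000 and 850 spent so far, `budget_alerts(850.0, 1000.0)` reports that the 50% and 80% thresholds have been crossed, which is the moment to investigate usage rather than after the invoice arrives.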

While the mentioned tools can technically power any data product, it's good to also consider alternatives:

If you have already bought into the Google Cloud Platform (GCP) offering, a solution like BigQuery might be sufficient. While it might not offer everything that solutions like Databricks or Snowflake have, it does come with neat integrations with Google offerings and has a free tier (1 TB querying for free every month).

When only dealing with small datasets (e.g. up to millions of rows), one or more PostgreSQL instances might be sufficient. This can significantly reduce the complexity of your data technology stack.

When near real-time analytics is important, ClickHouse might be an interesting option. It has better capabilities to handle large streaming data use cases than some of the above.

When it comes to a data analytics technology stack, there are many more choices to make. If you want to learn more about building a data platform from scratch, I recommend watching the talk I did at PyData Amsterdam 2024.

3. AI agents to disrupt data analytics

The rise of AI agents is going to disrupt the field of data analytics more than any other data discipline. In the past, proficiency in SQL and a business intelligence (BI) tool like Tableau or Power BI was enough to secure a role in data analytics. However, since the introduction of GenAI, the landscape has been changing rapidly. AI agents are on the verge of being, or already are, capable of automating many tasks that were once the domain of data analysts, from querying databases to generating visualisations and dashboards.

Text-to-SQL: The crucial building block

One of the earliest applications of GenAI in analytics has been Text-to-SQL. This allows users to query databases using natural language instead of writing SQL queries. For example, a non-technical user could ask, "What were the total sales in Q3 for the European region?" and the AI agent would automatically generate and execute the corresponding SQL query.
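The example question can be made concrete with a small sketch. The SQL below is hand-written to show what an agent might plausibly generate; the `sales` table, its columns and the year 2024 are assumptions for illustration, and the `run_read_only` guard is a deliberately naive safety check, not a complete one.

```python
import sqlite3

QUESTION = "What were the total sales in Q3 for the European region?"

# Hand-written stand-in for model output; the schema and year are assumed.
GENERATED_SQL = """
SELECT SUM(amount) AS total_sales
FROM sales
WHERE region = 'Europe'
  AND sale_date >= '2024-07-01'
  AND sale_date < '2024-10-01'
"""


def run_read_only(conn: sqlite3.Connection, sql: str) -> list:
    """Execute generated SQL only if it looks like a plain SELECT statement."""
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(sql).fetchall()


# Small in-memory data set so the query can actually be executed.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("Europe", "2024-07-15", 100.0),
        ("Europe", "2024-09-30", 50.0),
        ("Europe", "2024-10-01", 75.0),    # Q4, excluded by the date filter
        ("Americas", "2024-08-01", 200.0),  # other region, excluded
    ],
)

result = run_read_only(conn, GENERATED_SQL)
```

Even in this tiny example the model has to know which table holds sales, what "European region" maps to and how Q3 is defined, which is exactly the metadata a data dictionary provides.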

Tools like OpenAI's Codex, Microsoft's Power BI Q&A, Databricks Genie and open-source libraries such as Vanna.AI and LangChain+LangSmith are leading the charge in this space. These tools not only lower the barrier to entry for performing data analysis but also significantly reduce the time spent on writing and debugging SQL queries. However, coming back to the first section of this post, the success of text-to-SQL systems depends heavily on the quality of the underlying data model and metadata. Without well-defined data dictionaries and relationships, the AI may struggle to interpret user requests accurately.

Text-to-SQL is one of the fundamental building blocks of successful AI agents for data analytics, in applications such as conversational analytics and analytics AI agents.

The potential of AI agents

GenAI-based chatbots like ChatGPT, Deepseek Chat and Claude.AI are already simplifying a lot of data analytics tasks. This, however, requires human interaction and manual extraction of any useful outputs. AI agents, on the other hand, hold significant potential to further revolutionise data analytics. By leveraging GenAI and further integrations, autonomous agents can automatically analyse datasets, derive conclusions and potentially take direct action.

For instance, an AI agent could be capable of interpreting the result of an A/B experiment, independently evaluating performance and implementing the successful variant without intervention. For its human supervisors, it would only need to output a detailed report on the results.
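The evaluation step of such an A/B experiment could look like the sketch below, which uses a standard two-proportion z-test on conversion counts. The function names, the 5% significance level and the decision rule are my own assumptions for illustration; a real agent would also need to consider sample size planning, guardrail metrics and multiple testing.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so nothing to distinguish
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


def decide(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05) -> str:
    """Pick a variant only when the difference is statistically significant."""
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value < alpha:
        return "B" if z > 0 else "A"
    return "no clear winner"
```

For example, 100 conversions out of 1,000 visitors for variant A against 150 out of 1,000 for variant B gives a clear preference for B, while 100 against 105 does not justify rolling out either variant.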

However, while AI can automate many of these technical aspects, the human touch remains critical for nuanced interpretation, strategic decision-making, and aligning insights with business goals. Data analysts may find their roles evolving from data crunching to focusing on higher-value tasks such as validating AI-generated insights, refining narratives for stakeholder alignment and driving data-driven strategy. In this way, AI agents are less about replacing data analysts and more about augmenting their capabilities, enabling them to deliver greater efficiency and impact.

What about dashboards?

Another area where AI agents are starting to make an impact is in the automatic generation of dashboards and visualisations. Tools like Plotly, Streamlit and Dash are being integrated with AI-driven workflows to create dynamic, interactive dashboards with minimal human intervention.

For example, based on specific user needs, an AI agent could independently analyse a dataset and generate a dashboard complete with charts, tables and insights, all in a matter of minutes.

This agentic approach not only speeds up the dashboard creation process but also ensures that visualisations are tailored to the specific needs of the user. For example, an AI agent could automatically highlight outliers, apply relevant filters, or suggest additional metrics based on the dataset's characteristics. BI tools like Looker, Power BI and Tableau are actively integrating AI capabilities, enabling users to create visualisations with simple natural language prompts.

But where are we now?

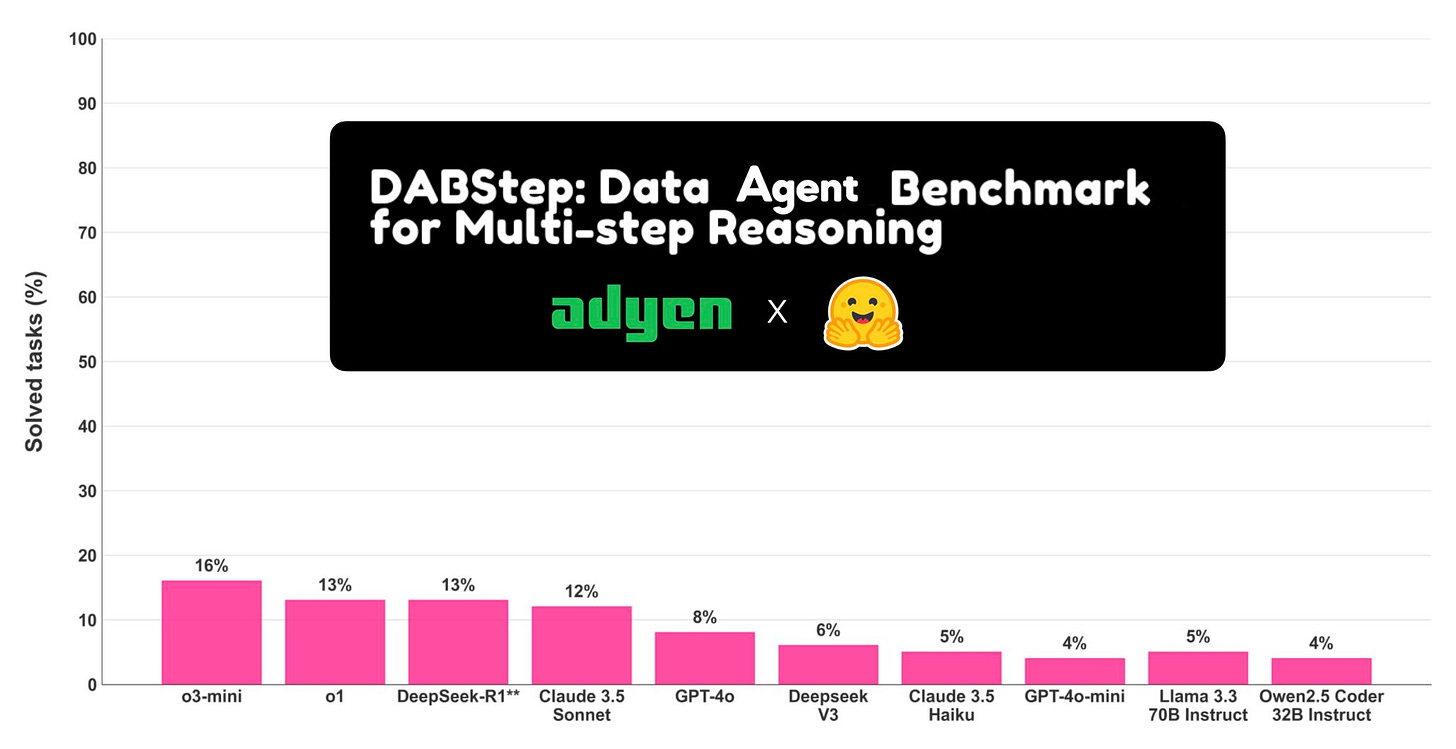

Just this week, Hugging Face and Adyen released a benchmark to evaluate LLMs on certain data analytics tasks using multi-step reasoning. Shockingly, even the more sophisticated reasoning models struggle with the more difficult data tasks.

In summary, the models are quite capable of performing easy data tasks, while they struggle with the more difficult ones. For a more in-depth analysis, go ahead and check out the blog.

Challenges and considerations

While the potential of AI agents in data analytics is immense, there are challenges:

Mistakes and reputation risk: Autonomous AI agents carry the risk of making high-impact mistakes. This can also harm the reputation of a company, especially if the AI agent is to blame for a mistake.

Necessary human oversight: It is necessary to implement sufficient controls to confidently roll out autonomous AI agents. We're on the verge of seeing what's possible, but this also means the technology still has to mature. Benchmarks today tell us that big leaps still have to be made before agents can do difficult data analytics tasks autonomously.

Shifts in skills: As AI takes over routine tasks, data analysts will need to up-skill in areas like data storytelling, driving business decision-making, and AI model interpretation to remain relevant.

4. The future of data analytics

The future of data analytics will be characterised by greater accessibility, increased automation and a shift in the skill sets required for data professionals. Organisations that invest in solid data foundations, embrace AI responsibly and adapt to these changes will be well-positioned to harness the full potential of data analytics in the years to come.

However, the success of these technologies hinges on solid data fundamentals such as data dictionaries, data contracts and robust data quality assurance. In addition, the successful implementation and upkeep of data ecosystems is crucial to keep data accessible and to be able to process potentially large quantities of data.

In summary, my belief is that:

Self-service analytics and embedded analytics will continue to grow, enabling more stakeholders to access and utilise data without heavy reliance on technical teams. This democratisation will accelerate decision-making and innovation but will require organisations to invest in robust data governance and quality assurance to maintain trust and accuracy.

We will see a consolidation of data technology tooling. As data ecosystems grow in complexity, organisations will face challenges in managing costs, tool maintenance and scalability. Simplifying tech stacks and tooling will be critical to maintaining efficient and effective data operations.

Required skills in data analytics will change. The role of data analysts will evolve as tasks like database querying and dashboard creation can increasingly be offloaded to AI. Data analysts will likely focus more on business-related tasks such as data storytelling, helping drive business decisions and interpreting AI-generated results.

As the field continues to evolve, staying ahead will require a combination of technical expertise, strategic thinking and a willingness to embrace new tools and methodologies. The future of data analytics will be about bringing technology and business closer together.

You have read the second edition of The Data Canal. The Data Canal is a newsletter for data professionals about data tooling, data architecture and careers in data. If you have enjoyed this post, make sure to subscribe for more.